No Chromecast? No Problem! Litecast case study

Allow me to start by expressing just how much I love watching movies and TV shows (including wrestling and random YouTube videos) on my TV while lying down in bed. I used to keep a wireless mouse and keyboard near me at all times to control what I watched without having to go directly to my desk. This is no longer the case: my TV isn’t a smart one, and I don’t yet have the budget for the device I’ve always wanted, a Chromecast.

Casting media from my little smartphone has changed the way I consume media ever since I first tried the Chromecast back in 2016, and I decided that enough was enough! I was about to spend 600 dollars on a Chromecast TV 4K, yet the curious developer in me told me:

What if I could just build my own (media) URL casting mobile app with remote control action buttons?

Enter Litecast

Litecast is just the first name that came to mind when it was time to name my project. At that point I didn’t even know what exactly I wanted to do for the server part (the software that listens to cast and control instructions from the mobile app). I explored 3 methods in order and settled on the 3rd:

- Chrome Extension: Since I consume all my media with Google Chrome, why not build my first ever extension, right? All I had to do was use the background service worker script (background.js) to listen for messages from the content script (content.js), which in turn would listen to websocket events sent through the mobile app.

- Native desktop app: I remember using Electron to create Chromium-based desktop apps back in the day, and I wanted to maybe use its integrated web viewer to display the casted URLs or control my main browser through low-level commands; I didn’t know how but I wanted to. Turns out this was way out of the question… too much work for what could essentially be achieved with:

- A NodeJS script: This was perfect for what I had in mind. I could connect to my websocket channel and, immediately after, launch a Chrome instance with remote debugging enabled, controlled through a library called Puppeteer. Lastly, if I ever got tired of running the script manually from the terminal I could just create a .desktop entry (on Linux it’s the equivalent of a desktop shortcut that runs a command) and give it a nice icon, maybe even tell it to not display the underlying terminal window.

Sequence diagram

Here’s a little diagram I made to (hopefully) convey my idea in a visual manner:

What went wrong with the extension

The first complication with the extension was that I tried to run the websocket subscription inside the background service worker script, but it turns out it goes to sleep after a few seconds of inactivity and can only be woken up with specific hacks or via messages from the other scripts.

Moving it to the content script meant that one subscription would be made on every tab I opened and re-made after navigating to a different page. I solved that with a few if statements and an active tab query, but then I ran into the last complication:

Google Chrome has a security policy that disallows certain events from being fired without user action. This meant that I could not trigger full screen remotely, and I think I would also find complications while interacting with elements inside iframes.

Choosing the websocket service

This component is very trivial yet very important, since it allows communication between a client (the mobile app) and a server (the script running commands on Chrome through Puppeteer). I always wanted to try a service called Pusher, which saves me the hassle of setting up my own websocket server either on a local machine or on a cloud VPS.

The workflow is pretty simple. The client library listens to events on one or many channels:

var channel = pusher.subscribe('my-channel');

channel.bind('my-event', function(data) {

alert('Received my-event with message: ' + data.message);

});

And the server library is used to send messages through channels:

pusher.trigger('my-channel', 'my-event', {

"message": "hello world"

});

Once you sign up for free and create an app, you are given some credentials (I obscured the last 4 and 8 characters of the app key and secret respectively):

app_id = "1772867"

key = "26e94a4fff723c77XXXX"

secret = "c1b044979444XXXXXXXX"

cluster = "us3"

The app key and secret should be kept private as environment variables (or obscured in any way possible) because if you accidentally share them, nothing stops others from abusing your account.
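Here is a minimal sketch of the environment-variable approach on the NodeJS side. The variable names (PUSHER_APP_ID, PUSHER_KEY, PUSHER_SECRET, PUSHER_CLUSTER) and the loadPusherConfig helper are hypothetical, not something Pusher prescribes; the idea is only to fail fast when a credential is missing instead of hardcoding it.

```javascript
// Hypothetical helper: read Pusher credentials from environment variables
// instead of hardcoding them. The variable names are assumptions.
const REQUIRED_VARS = ["PUSHER_APP_ID", "PUSHER_KEY", "PUSHER_SECRET", "PUSHER_CLUSTER"];

function loadPusherConfig(env = process.env) {
  // Collect every missing variable so the error lists them all at once.
  const missing = REQUIRED_VARS.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    appId: env.PUSHER_APP_ID,
    key: env.PUSHER_KEY,
    secret: env.PUSHER_SECRET,
    cluster: env.PUSHER_CLUSTER,
  };
}
```

The variables would be exported in the shell (or loaded by a dotenv-style package) before starting the script, so the values never land in version control.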

So what if I want my own websocket server?

Then all power to you! I was also thinking of doing the same down the line. If you choose this approach there is a huge benefit and a small drawback:

- If you send a websocket message through a server running on a machine that is connected to the same network (wifi most likely) as the mobile app, you will experience almost zero latency between commands. Compare that to Pusher, where the message has to go through a server in your cluster of choice (I live on the West Coast, that’s why I chose US3, the closest to me) and then back to your Puppeteer server script. I can tell you from personal experience that the latency I get is usually between 100 ms and 1 full second; only once did I experience a 3-second delay.

- The drawback is that if you do run, let’s say, SocketIO in your local network, the mobile app needs to know which IP to connect to, which can be a huge hassle if your router/modem gives you a different IP address every time you reset your connection. I’m lucky that mine is always 192.168.0.2 but others might not be so lucky. There is no easy solution to this problem, but it is a good opportunity to learn networking with your mobile app language of choice (Dart, Java, Kotlin, JS/TS, Swift, etc).

Yes, you can have the web socket server and the puppeteer code coexist in the same script. Just like how my Pusher code is in the same file as the Puppeteer code.
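One way to keep that door open is to write the command handlers so they don’t care which transport delivered the message. This is a minimal sketch, assuming a hypothetical createDispatcher helper and the two event names used later in this article (cast and keyboard); the handlers here are stand-ins that only record what they received.

```javascript
// Hypothetical helper: route incoming events to handlers regardless of
// whether they arrived through Pusher, SocketIO, or anything else.
function createDispatcher(handlers) {
  return function dispatch(eventName, data) {
    // Only accept names defined directly on the handlers object.
    const known = Object.prototype.hasOwnProperty.call(handlers, eventName);
    if (!known || typeof handlers[eventName] !== "function") {
      return false; // Unknown event: ignore it instead of crashing.
    }
    handlers[eventName](data);
    return true;
  };
}

// Example wiring with stand-in handlers.
const received = [];
const dispatch = createDispatcher({
  cast: ({ url }) => received.push(`cast:${url}`),
  keyboard: ({ key }) => received.push(`key:${key}`),
});

dispatch("cast", { url: "https://example.com" });
dispatch("keyboard", { key: "k" });
```

With Pusher, dispatch would be called from the channel’s bind callbacks; with a self-hosted server it would be called from whatever "on message" callback that library exposes, so swapping transports later would not touch the handlers.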

Building the client (mobile app)

From here on out this case study will almost feel like a tutorial but trust me, it’s not. I will omit as much unnecessary code as possible, but if you want to see the full thing, wait until I post the GitHub repo at the very start of the article in the form of a blue update note.

First thing I did was decide that I wanted to start learning Flutter the hard (and correct) way: by doing. And since I own an Android phone I chose Android as the only platform (with Java as its native language). Then I tried finding a reliable Pusher library for Dart (3.0 compatible) but found none that worked, so I went and created my own class to send HTTP requests to its API. You can find it on my Gist account but I’ll also paste it here (partially):

// pusher.dart

import 'package:http/http.dart' as http;

import 'package:crypto/crypto.dart';

import 'dart:convert';

class Pusher {

// Replace -us3 with -YOUR_CLUSTER_REGION

static String baseUrl = "https://api-us3.pusher.com/apps";

// Constructor and 3 "final" String properties: appId, appKey, secret

String getFullUrl(String channel, String event, String bodyStr) {

// Returns the URL with all its components, including authentication

// (an MD5 hash of the body plus an HMAC-SHA256 signature), as documented here:

// https://pusher.com/docs/channels/library_auth_reference/rest-api/#generating-authentication-signatures

}

Future<int> triggerEvent(String channel, String event, Map data) async {

// Sends an HTTP POST request to the Pusher HTTP API, the URL is assembled

// through the getFullUrl method, and the JSON body has the following schema:

// { name: string, channel: string, data: json | string } as documented here:

// https://pusher.com/docs/channels/library_auth_reference/rest-api/

}

}

Designing the UI

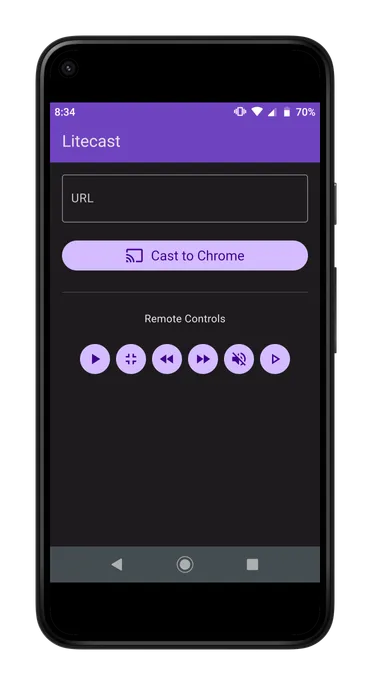

From the very start I had an idea of how I wanted my (extremely simple) UI to look like, and here’s the final result for the first version:

After a quick inspection, can you infer what the purpose of this app is? Very simple:

- Send URLs to the puppeteer Chrome instance and navigate to them automatically on the first tab (or can be the active tab, depends on you really).

- Send key presses (and combinations such as Shift+N, YouTube’s shortcut for the next video) to the server script so that Puppeteer runs the necessary key press/up/down functions. Why? Because most respectable media services have keyboard shortcuts to interact with the video playback. The best examples are YouTube, Twitch, and Netflix, where you can press Space or k to play and pause the video; press m to mute and unmute the audio; press f to toggle full screen (what failed in the Chrome extension); ArrowUp and ArrowDown to change the volume; etc.

Anyway, here’s the code just for the UI, with no actions or functionality yet:

// main.dart (can be found inside lib/ on your newly created flutter project)

import 'package:flutter/material.dart';

void main() {

runApp(const MyApp());

}

class MyApp extends StatelessWidget {

const MyApp({super.key});

@override

Widget build(BuildContext context) {

return MaterialApp(

title: 'Litecast',

debugShowCheckedModeBanner: false,

theme: ThemeData(

colorSchemeSeed: Colors.deepPurple,

useMaterial3: true,

brightness: Brightness.dark,

),

home: const Home(title: 'Litecast'),

);

}

}

class Home extends StatefulWidget {

const Home({super.key, required this.title});

final String title;

@override

State<Home> createState() => _HomeState();

}

class _HomeState extends State<Home> {

TextEditingController urlTEC = TextEditingController();

@override

void initState() {

super.initState();

}

@override

void dispose() {

urlTEC.dispose();

super.dispose();

}

@override

Widget build(BuildContext context) {

return Scaffold(

resizeToAvoidBottomInset: false,

appBar: AppBar(

backgroundColor: Theme.of(context).colorScheme.inversePrimary,

title: Text(widget.title),

),

body: Padding(

padding: const EdgeInsets.symmetric(

horizontal: 16.0,

vertical: 16.0,

),

child: Column(

mainAxisAlignment: MainAxisAlignment.start,

children: <Widget>[

TextField(

controller: urlTEC,

decoration: const InputDecoration(

border: OutlineInputBorder(),

labelText: 'URL',

hintText: 'Enter the URL to cast.',

),

),

const SizedBox(height: 20),

FilledButton(

onPressed: () { },

style: FilledButton.styleFrom(

textStyle: const TextStyle(

fontSize: 18,

),

),

child: const Row(

mainAxisAlignment: MainAxisAlignment.center,

children: [

Icon(Icons.cast),

SizedBox(width: 10),

Text('Cast to Chrome'),

],

),

),

const Divider(height: 50),

const Text('Remote Controls'),

const SizedBox(height: 20),

Row(

mainAxisAlignment: MainAxisAlignment.center,

children: [

IconButton.filled(

icon: const Icon(Icons.play_arrow),

onPressed: () { },

tooltip: 'Play/Pause (Main)',

),

IconButton.filled(

icon: const Icon(Icons.fullscreen_exit),

onPressed: () { },

tooltip: 'Toggle Full Screen',

),

IconButton.filled(

icon: const Icon(Icons.fast_rewind),

onPressed: () { },

tooltip: 'Rewind',

),

IconButton.filled(

icon: const Icon(Icons.fast_forward),

onPressed: () { },

tooltip: 'Forward',

),

IconButton.filled(

icon: const Icon(Icons.volume_off),

onPressed: () { },

tooltip: 'Mute/Unmute',

),

IconButton.filled(

icon: const Icon(Icons.play_arrow_outlined),

onPressed: () { },

tooltip: 'Play/Pause (Alternative)',

),

],

),

],

),

),

);

}

}

You will notice 2 key things here.

One is that the URL text field’s state is managed through a TextEditingController, which stores whatever text the field holds and updates every time the user types in it.

If you’ve ever experienced Angular’s two-way data binding you’ll understand how this differs from React’s approach which is updating local state during an onChange() event.

The other thing to notice is that every button has an empty onPressed callback.

Don’t worry, we’ll fill them all in after the rest of the code is done.

Adding functionality to the UI

The first bit of functionality will be instantiating a Pusher object (the class shown earlier) or, if you chose your own websocket server, adding the boilerplate code that lets Flutter send messages through it (for example, using SocketIO for Dart).

// main.dart

import 'package:litecast/pusher.dart';

// ...

class _HomeState extends State<Home> {

// You could also use a dotenv approach to avoid having these

// in your source code: https://pub.dev/packages/flutter_dotenv

Pusher pusher = const Pusher(

appId: '1772867',

appKey: '26e94a4fff723c77XXXX',

secret: 'c1b044979444XXXXXXXX',

);

// ...

}

Next, we add 2 functions: one to send a URL casting event, and another to send a keyboard press event.

// main.dart

// ...

class _HomeState extends State<Home> {

// ... pusher, initState(), dispose()

void castUrl() async {

String url = urlTEC.text;

// Maybe validate the URL here later?

Map data = {'url': url};

int resCode = await pusher.triggerEvent('litecast', 'cast', data);

if (resCode == 200) {

urlTEC.text = '';

} else {

// Handle failure your way

}

}

void sendKeyPress(String key) async {

Map data = {'key': key};

await pusher.triggerEvent('litecast', 'keyboard', data);

}

// ...

}

If you decide to use SocketIO, the code might look more like handling a call to socket.emit() instead of an HTTP request to the Pusher API.

Also notice how the payload I’m sending with both events is a map with a single key-value pair instead of just a bare string (the URL or the key).

Who knows, maybe in the future I’ll need to add more fields.

Also, the code isn’t DRY for now: both methods do nearly the same thing, so they could easily be refactored into 1 method that accepts a map, an event name, and a failure handler (if we need different ways to handle failure for each kind of command).

Now it’s time to attach these handlers to the UI buttons. In the following code block I put the callback functions in order of appearance, just replace the existing empty ones:

// For the 'Cast to Chrome' button (FilledButton)

onPressed: () => castUrl()

// For each control button (IconButton.filled)

// Play/Pause (Main)

onPressed: () => sendKeyPress(' ') // Space

// Toggle Full Screen

onPressed: () => sendKeyPress('f')

// Rewind

onPressed: () => sendKeyPress('ArrowLeft')

// Forward

onPressed: () => sendKeyPress('ArrowRight')

// Mute/Unmute

onPressed: () => sendKeyPress('m')

// Play/Pause (Alternative)

onPressed: () => sendKeyPress('k')

If you are not sure what key to send, here’s a site that tells you the name of the keys you press: https://www.toptal.com/developers/keycode (refer to event.key).

Alternatively, Puppeteer has a reference of keys you can press (string constants): https://pptr.dev/api/puppeteer.keyinput
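For combinations such as Shift+N, one option is to send the whole shortcut as a single string and split it on the server before handing it to Puppeteer. This is a minimal sketch, assuming a hypothetical "Modifier+key" string format and hypothetical helper names (parseShortcut, pressShortcut); the only thing pressShortcut expects from its keyboard argument is the down, press, and up methods that Puppeteer’s page.keyboard provides.

```javascript
// Modifier names as they appear in Puppeteer's key reference.
const MODIFIERS = new Set(["Shift", "Control", "Alt", "Meta"]);

// Hypothetical format: "Shift+n" -> { modifiers: ["Shift"], key: "n" }.
function parseShortcut(combo) {
  const parts = combo.split("+");
  const key = parts.pop(); // The last part is the key to press.
  const unknown = parts.filter((part) => !MODIFIERS.has(part));
  if (!key || unknown.length > 0) {
    throw new Error(`Unsupported shortcut: ${combo}`);
  }
  return { modifiers: parts, key };
}

// Hold the modifiers, press the key, then release in reverse order.
async function pressShortcut(keyboard, combo) {
  const { modifiers, key } = parseShortcut(combo);
  for (const modifier of modifiers) await keyboard.down(modifier);
  await keyboard.press(key);
  for (const modifier of [...modifiers].reverse()) await keyboard.up(modifier);
}
```

A plain key such as "k" or " " parses to an empty modifier list, so the same function could serve both single keys and shortcuts.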

Making use of Android’s “Share Intent”

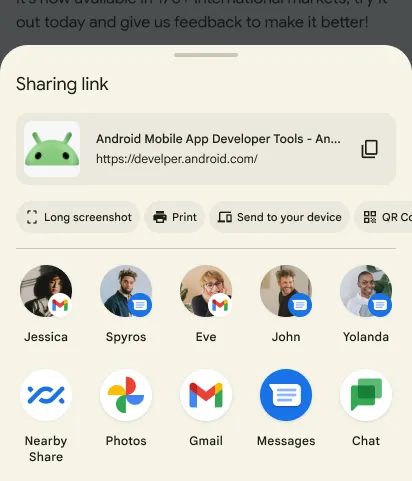

When I was building the UI I thought to myself, wouldn’t it be nice if I could just press the share button on my media app of choice (Netflix, YouTube, Crunchyroll, etc) and my app were to appear in the list of apps to share the link through?

Well, that’s what a share intent is; I discovered that after browsing the Flutter docs.

The idea is that once the link of whatever I’m watching on my Android phone is “shared” through my app, the app resumes with the URL already inside the text field, ready for me to cast it. Alternatively, it could cast the URL right away if it’s valid, skipping the need to tap the button. Plus, thanks to the way intents work, if the app is not open, Android starts it, but ideally you’d want to have it open in the background to avoid wasting time.

Note for iOS devs: I don’t have an iPhone to test this Flutter plugin, plus it requires more work and code than Android so use it only if you really feel like trying. It leverages the iOS 8 share sheet I presume.

The first step (I followed this nice tutorial and adapted it to my use case) is to add an intent-filter entry to the Manifest XML file (on the native side) located at android/app/src/main/AndroidManifest.xml:

<manifest xmlns:android="http://schemas.android.com/apk/res/android">

<application ...>

<activity ...>

<!-- ... -->

<!-- Allows text sharing through the share dialog -->

<intent-filter>

<action android:name="android.intent.action.SEND" />

<category android:name="android.intent.category.DEFAULT" />

<data android:mimeType="text/plain" />

</intent-filter>

</activity>

<!-- ... -->

</application>

<!-- ... -->

</manifest>

What this little piece of code does is allow your application to appear in the list of apps that you can share data with.

Now all we need is to tell the Android platform what to do when the user actually performs this sharing intent.

For that, we need to modify the main activity’s Java code, usually located at android/app/src/main/java/<PACKAGE PATH>/MainActivity.java.

package com.kozmiclblog.litecast;

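// Your IDE will usually add these imports automatically

import android.content.Intent;

import android.os.Bundle;

import androidx.annotation.NonNull;

import io.flutter.embedding.android.FlutterActivity;

import io.flutter.embedding.engine.FlutterEngine;

import io.flutter.plugin.common.MethodChannel;

import io.flutter.plugins.GeneratedPluginRegistrant;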

public class MainActivity extends FlutterActivity {

private static final String CHANNEL = "app.channel.shared.data";

private String sharedText;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

handleSendText(getIntent());

}

@Override

protected void onNewIntent(@NonNull Intent intent) {

super.onNewIntent(intent);

handleSendText(intent);

}

@Override

public void configureFlutterEngine(@NonNull FlutterEngine flutterEngine) {

GeneratedPluginRegistrant.registerWith(flutterEngine);

new MethodChannel(flutterEngine.getDartExecutor().getBinaryMessenger(), CHANNEL)

.setMethodCallHandler(

(call, result) -> {

if (call.method.contentEquals("getSharedText")) {

result.success(sharedText);

sharedText = null;

} else {

result.notImplemented();

}

}

);

}

void handleSendText(@NonNull Intent intent) {

String action = intent.getAction();

String type = intent.getType();

if (Intent.ACTION_SEND.equals(action) && type != null) {

if ("text/plain".equals(type)) {

sharedText = intent.getStringExtra(Intent.EXTRA_TEXT);

}

}

}

}

Scary code? Not really! Here’s the explanation, I hope it makes sense:

- A method called handleSendText is in charge of processing intents with the ACTION_SEND action, which get triggered when we share links through apps. If the intent that gets passed to it happens to be the right one, it sets a property called sharedText to the URL/text that was shared.

- When the app is launched, and thus the onCreate lifecycle method is called, it calls the aforementioned method. Obviously it will only do something if the app was opened by the sharing action and not by the user tapping the launcher icon, in which case getIntent() returns an intent that is not an ACTION_SEND and has a null type.

- If the app is already running and another link is shared through it, the onNewIntent method gets called, which does the same thing: call the handleSendText method.

- Finally, a special method called configureFlutterEngine is used to create a communication channel between the Dart code and the underlying native Java Android platform. This channel handles a method call named getSharedText and, when it receives one, sends back whatever is stored in the sharedText string property (and then clears it). Then it’s up to the Flutter side to process this string.

On the Flutter side, however, 3 things need to be added: a handler for when the app is resumed via a share intent while it is already running, the communication channel to request the shared URL text, and the method that will process the string sent back through that channel:

// main.dart

// ...

import 'package:flutter/services.dart';

// ...

class _HomeState extends State<Home> {

static const platform = MethodChannel('app.channel.shared.data');

// ...

@override

void initState() {

super.initState();

// Initialize the 'resume app' listener and ask for the shared text

_init();

}

_init() async {

SystemChannels.lifecycle.setMessageHandler((msg) async {

if (msg != null && msg.contains('resumed')) {

await getSharedText();

}

return null;

});

await getSharedText();

}

// ...

Future<void> getSharedText() async {

var sharedData = await platform.invokeMethod('getSharedText');

if (sharedData != null) {

urlTEC.text = sharedData;

}

}

}

Once this code is added the client is pretty much complete. It just needs finishing touches, more error handling, validation, and maybe even a code refactor.

The only complex part of this code is the call to SystemChannels.lifecycle.setMessageHandler. All it does is listen for when the app is resumed and, when that happens, fetch whatever text is stored in the native MainActivity’s sharedText property.

Remember that it can and will be null if nothing has been shared yet or if the Flutter side already received a valid string (remember this Java line: sharedText = null; inside the configureFlutterEngine method).

Coding the server script

The server script is a simple NodeJS project so we’ll start by running the npm init command with the usual defaults and then install the following npm packages with npm install --save <PACKAGE NAME>:

- puppeteer: The library responsible for connecting to a Chrome instance with remote debugging and sending instructions to it. Normally it is used for complex web scraping, automation, and end-to-end testing but I’m gonna use it to empower my glorified remote control app.

- pusher-js: The JavaScript client library that I chose to subscribe to my litecast channel and process the events with Puppeteer. As I mentioned, I may switch to SocketIO in the future.

The project structure is simple (for now):

.

├── [ignored] node_modules

├── constants.mjs

├── helpers.mjs

├── index.mjs

├── package.json

└── package-lock.json

The first file I’m gonna focus on is helpers.mjs.

As a reminder, the .mjs extension allows me to use the import syntax.

If this changed and I no longer need the m part then I must be living under a rock… send me an email if you know something about it :).

/**

* @param { number } ms - The amount of milliseconds to wait

* @return { Promise<void> } A void promise that resolves in time

*/

export function sleep(ms) {

return new Promise((resolve) => {

setTimeout(() => resolve(), ms);

});

}

Yes, I’m not joking: for now (version 1.0) this is all I need. This function lets me wait for certain things to complete when a library doesn’t provide a reliable promise-based method/function for it, the way Puppeteer does with Page.waitForSelector.

The next file I want to show you is constants.mjs, and as I already mentioned twice, you can choose to install the dotenv npm package instead of hardcoding your credentials inside the source code.

Notice how you don’t need your Pusher app secret to listen to events, only to trigger them.

export const CHROME_PATH = "/opt/google/chrome/chrome";

export const DEBUG_PORT = "21222";

export const PUSHER_APP_KEY = '26e94a4fff723c77XXXX';

export const PUSHER_CLUSTER = 'us3';

export const PUSHER_CHANNEL = 'litecast';

And last, the main course: index.mjs, which contains all the code for version 1.0.

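// Imports (a proper IDE auto-imports these anyway :)

import { spawn } from 'node:child_process';

import puppeteer from 'puppeteer';

import Pusher from 'pusher-js';

import { sleep } from './helpers.mjs';

import { CHROME_PATH, DEBUG_PORT, PUSHER_APP_KEY, PUSHER_CLUSTER, PUSHER_CHANNEL } from './constants.mjs';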

let browserProcess = spawn(CHROME_PATH, [`--remote-debugging-port=${DEBUG_PORT}`]);

await sleep(3000); // Wait for the browser to properly load

console.log("Now listening for commands...");

const pusher = new Pusher(PUSHER_APP_KEY, {

cluster: PUSHER_CLUSTER

});

const channel = pusher.subscribe(PUSHER_CHANNEL);

browserProcess.on('close', () => {

pusher.disconnect();

console.log("Goodbye | Browser closed and Pusher disconnected");

});

try {

const b = await puppeteer.connect({

browserURL: `http://127.0.0.1:${DEBUG_PORT}`,

defaultViewport: null

});

const [page] = await b.pages();

channel.bind('cast', async ({ url }) => {

if (url !== undefined && url.startsWith('http')) {

await page.goto(url);

console.log(`Navigated to ${url}`);

} else {

console.log('Invalid or missing URL in Cast event data');

}

});

channel.bind('keyboard', async ({ key }) => {

await page.keyboard.press(key);

});

} catch (err) {

console.error(`Puppeteer Initialization Failed | ${err.message}`);

}

Let’s explain the code from top to bottom.

We need a Google Chrome instance that has remote debugging enabled. For that we either launch it manually from the terminal (with the --remote-debugging-port flag set to 21222), or let the script launch it with NodeJS’s child_process module, which comes in the standard library.

Next, I decided to give the browser 3 seconds to properly load and get ready to accept connections from Puppeteer. Without waiting I experienced a bug so I didn’t want to take any chances. Once the 3 seconds pass, the script subscribes to the appropriate Pusher channel and then registers a handler on the browser process: if it gets closed (or killed), disconnect from Pusher so that the script can finally exit (otherwise it keeps running).
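A fixed 3-second wait works, but an alternative is to poll until the browser actually answers. The sketch below is not what the script above does; it assumes a hypothetical waitUntil helper, relies on Chrome serving a JSON document at /json/version on the remote debugging port, and uses the global fetch available in recent NodeJS versions (18+).

```javascript
// Hypothetical helper: retry an async probe until it returns true
// or the timeout is reached. Resolves to true on success, false on timeout.
async function waitUntil(probe, { timeoutMs = 10000, intervalMs = 250 } = {}) {
  const deadline = Date.now() + timeoutMs;
  while (Date.now() < deadline) {
    try {
      if (await probe()) return true;
    } catch {
      // The probe failed (e.g. connection refused): try again after a pause.
    }
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
  return false;
}

// Probe for Chrome's remote debugging endpoint on the given port.
function chromeIsReady(port) {
  return async () => {
    const res = await fetch(`http://127.0.0.1:${port}/json/version`);
    return res.ok;
  };
}
```

In index.mjs this could stand in for the sleep call with something like `const ready = await waitUntil(chromeIsReady(DEBUG_PORT));`, bailing out with an error message when ready is false.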

Finally, it attempts to make Puppeteer establish a connection to the spawned Chrome instance (if it fails, it just tells me what went wrong).

Now it’s time to grab the first tab (if it gets closed intentionally or by accident, the program will break). There is also the option of grabbing the active tab every time a Puppeteer Page method is about to be called.

The only downside is that it may introduce additional latency, but nothing too noticeable. Here’s the code I found that shows how it’s done:

async function getActiveTab(browser) {

const pages = await browser.pages();

for (const page of pages) {

const state = await page.evaluate(() => document.visibilityState);

// One of these pages is bound to be the one that is visible so I

// think there is no way of reaching the error throw line.

if (state === 'visible') return page;

}

throw new Error('There is no active tab for some weird reason.');

}

The last thing to observe is the 2 channel.bind method calls that listen to cast and keyboard events.

I already explained what they do, so it comes as no surprise that upon receiving cast events, all the script has to do is check if the URL is valid, and if so, navigate to it using Page.goto(<URL>).
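The startsWith('http') check in the script is deliberately loose. If stricter validation is ever needed, the URL class built into NodeJS can do the parsing. This is a minimal sketch, with isCastableUrl as a hypothetical helper name:

```javascript
// Hypothetical helper: accept only well-formed http(s) URLs.
function isCastableUrl(value) {
  if (typeof value !== "string") return false;
  try {
    const { protocol } = new URL(value);
    return protocol === "http:" || protocol === "https:";
  } catch {
    return false; // new URL() throws on strings it cannot parse.
  }
}
```

Unlike the prefix check, this rejects non-string payloads and strings such as "httpfoo" that merely start with the right letters.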

Keyboard events are also simple if only 1 key needs to be pressed.

We can achieve this with (Keyboard | ElementHandle).press(<KEY>, <OPTIONS?>).

The press method encompasses 2 events: keydown followed by keyup. If we want to simulate a keyboard shortcut, here’s how it’s done:

await page.keyboard.down('ShiftLeft'); // or just 'Shift'

await page.keyboard.press(THE_KEY); // e.g: 'n' for <Shift + N>

await page.keyboard.up('ShiftLeft');

Let’s take it for a spin

The only thing left now is to pray this thing works as I intended. To run the server script I can just navigate to my project’s directory and then:

node index.mjs

Here’s a short screen recording I made to test the server script. In the video I run the script through NodeJS and you can see how the Chrome instance opens automatically, and after 3 seconds it starts listening for Pusher events, which are logged on the terminal.

And on the client, another recording I made from my Android phone showing what it looks like to cast URLs from the YouTube app and tap on the remote control buttons that trigger actions on the server script.

What’s next?

For part 2 of this case study I want to implement some features and refactor some code:

- Implement the site-specific commands event, which would require a separate section in the mobile app and another Pusher event listener (titled command) in the server script that calls different functions depending on which site command was sent.

- Add error handling to the Flutter app, showing dialogs and snackbar notifications when things go wrong or when the user tries to do something that is not allowed.

- Migrate from Pusher to SocketIO to keep things local and low latency, even if I need to add a settings section to my Flutter app in which I have to manually type my (luckily static) local IP address.

- Find a way to make an existing Chrome instance coexist with a new one with remote debugging, or maybe even figure out if I can have Chromium and Chrome installed in the same operating system so I can launch Chromium for media consumption (from bed) without having to close my main browser window.

- Add features such as automatic full-screen, handling of iframe embeds, and finding other things I can control remotely using NodeJS such as the system volume itself rather than just the volume of the video I’m playing.